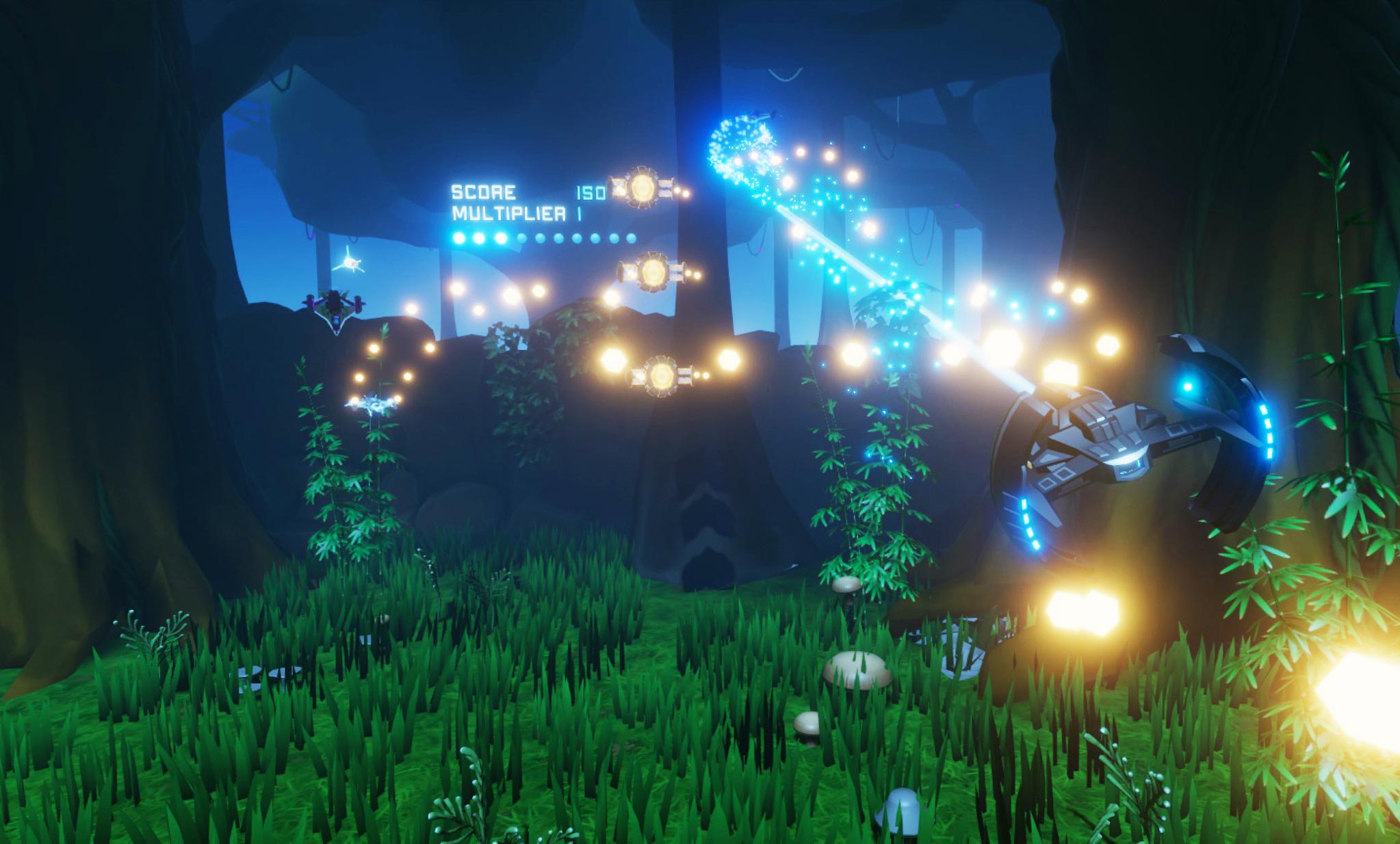

AudioStorm is an upcoming music-driven VR game that combines the rhythm and bullet-hell genres. It is also my experiment in using the new Lightweight Render Pipeline (LWRP) to target the ‘next gen’ of mobile VR, e.g. the Oculus Quest.

AudioStorm releases on Steam on July 8th.

On a technical level, this game is all about performance. It’s essentially a platform for throwing hundreds of projectiles and ships at the player in real time, in VR. One of the initial development goals was to keep CPU usage low enough for the game to run adequately on the Oculus Quest, and that meant making some smart compromises for performance:

- Instanced Rendering

- Unity Timeline for ship positioning

- VFX Graph for particle effects

- Ship pooling

- Fun AI

Timeline

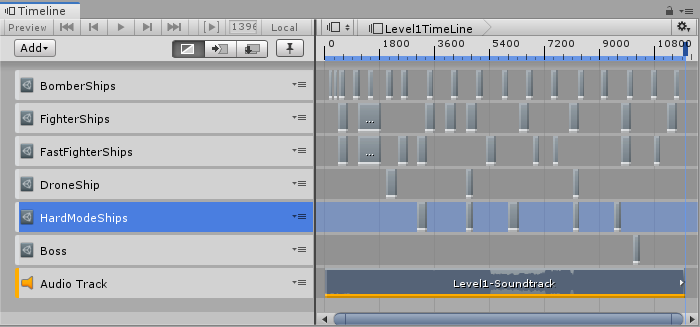

For a good rhythm experience, the events on screen are ‘mapped’ by hand to synchronise with the beat. My challenge was to implement this functionality quickly within Unity, and I settled on the Timeline feature introduced in 2017.1. Timeline is mainly advertised for composing cut-scenes, as in Unity’s Book of the Dead, and given Unity’s push into the film-making industry you’d be forgiven for thinking that is all it is good for.

Fortunately, Timeline can be extended with ‘custom playables’. To a programmer, that means you can use Timeline to run arbitrary code in sequence. This is what the timeline for a song in AudioStorm looks like.

Enemy spawns synchronized to music in Timeline
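Conceptually, a custom playable that triggers gameplay code behaves like the small sequencer below: clips are authored on a beat grid, and every frame any clip whose start the song time has just crossed runs its callback. Assuming a constant tempo, beat b starts at offset + 60 * b / BPM seconds. This is a plain-Python sketch with hypothetical names (`SpawnClip`, `BeatSequencer`, `offset`), not Unity’s Timeline API; in Unity the callback would more likely live in a `PlayableBehaviour` override such as `OnBehaviourPlay` or `ProcessFrame`.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SpawnClip:
    beat: float                  # clip position on the timeline, in beats
    action: Callable[[], None]   # arbitrary gameplay code to run (hypothetical)


class BeatSequencer:
    """Runs each clip's action once, when the song time crosses the clip's beat."""

    def __init__(self, bpm: float, clips: List[SpawnClip], offset: float = 0.0) -> None:
        if bpm <= 0:
            raise ValueError("bpm must be positive")
        self.bpm = bpm
        self.offset = offset  # seconds of audio before beat 0 (assumed known)
        self.clips = sorted(clips, key=lambda c: c.beat)
        self.last_time = float("-inf")

    def beat_to_seconds(self, beat: float) -> float:
        # Assumes a constant tempo: one beat lasts 60 / bpm seconds.
        return self.offset + beat * 60.0 / self.bpm

    def evaluate(self, song_time: float) -> None:
        # Fire every clip whose start lies in (last_time, song_time]. Testing the
        # interval, rather than "is the playhead inside the clip", means a long
        # frame cannot skip over a spawn. Seeking backwards is not handled.
        for clip in self.clips:
            if self.last_time < self.beat_to_seconds(clip.beat) <= song_time:
                clip.action()
        self.last_time = song_time
```

At 120 BPM, a clip on beat 8 fires on the first frame whose song time reaches 4.0 seconds, even if the previous frame was at 3.9 and the next jumps well past it.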

To a degree, you can also control state in Timeline by enabling and disabling tracks. I use this to control the difficulty of the game: when a player is doing well, I can raise the difficulty by enabling a ‘hard mode’ track with extra ship spawns.
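The track-toggling idea can be modelled as a set of named tracks whose spawns only count while the track is enabled. This is a hypothetical sketch: the `Track`/`Song` classes, the `performance` score in [0, 1] and the 0.8 threshold are illustrative assumptions, not how AudioStorm measures “doing well”. In Unity the toggle likely maps to muting a Timeline track (`TrackAsset.muted`) and rebuilding the director’s graph, which is worth verifying against the Timeline docs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Track:
    name: str
    spawn_times: List[float]   # seconds into the song
    enabled: bool = True


@dataclass
class Song:
    tracks: List[Track] = field(default_factory=list)

    def set_track_enabled(self, name: str, enabled: bool) -> None:
        for track in self.tracks:
            if track.name == name:
                track.enabled = enabled
                return
        raise KeyError(name)  # catch typos in track names early

    def spawns_between(self, t0: float, t1: float) -> List[float]:
        """Spawn times in (t0, t1], taken from enabled tracks only."""
        return sorted(t for track in self.tracks if track.enabled
                      for t in track.spawn_times if t0 < t <= t1)


def update_difficulty(song: Song, performance: float, threshold: float = 0.8) -> None:
    # Hypothetical rule: the extra-spawn track plays only while the player does well.
    song.set_track_enabled("hard", performance >= threshold)
```

A production rule would probably want some hysteresis (separate on and off thresholds) so the hard-mode track doesn’t flicker on and off around the threshold.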

Performance optimization

With so many AI ships spawning and despawning to the beat of the music, creating and destroying them on demand was always going to cause initialization spikes, plus dropped frames from Unity’s garbage collection. Implementing object pooling largely resolved the issue. It also cannot be overstated how important it is to pre-warm your pool in VR: instantiating agents tends to be CPU-heavy, and you don’t want that eating into your frame budget.
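A minimal pre-warmed pool looks something like the sketch below. It is written in Python with a hypothetical `Pool` class; in Unity the factory would typically instantiate a deactivated ship prefab during loading, and acquire/release would toggle it active rather than calling `Instantiate`/`Destroy` mid-song.

```python
from collections import deque
from typing import Callable, Deque, Generic, TypeVar

T = TypeVar("T")


class Pool(Generic[T]):
    """Object pool whose allocation cost is paid up front in prewarm()."""

    def __init__(self, factory: Callable[[], T]) -> None:
        self._factory = factory
        self._free: Deque[T] = deque()
        self.created = 0  # total objects ever constructed

    def prewarm(self, count: int) -> None:
        # Pay the instantiation cost during loading, not during gameplay.
        for _ in range(count):
            self._free.append(self._create())

    def acquire(self) -> T:
        # Reuse a free object; only construct a new one if the pool ran dry.
        return self._free.pop() if self._free else self._create()

    def release(self, obj: T) -> None:
        # Return the object for reuse instead of destroying it, so nothing
        # becomes garbage for the collector to clean up.
        self._free.append(obj)

    @property
    def available(self) -> int:
        return len(self._free)

    def _create(self) -> T:
        self.created += 1
        return self._factory()
```

Tracking `created` makes it easy to check during a playtest whether the pre-warm size was large enough: if the count grows after loading, the pool ran dry mid-song.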

For rendering hundreds of projectiles, Graphics.DrawMeshInstanced can draw them in a single batched operation sent to the GPU. While you could simply rely on the “Enable Instancing” checkbox in the material inspector, DrawMeshInstanced lets you operate directly on an array of transform matrices, without the overhead of hundreds of GameObjects in the scene.
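One practical detail: `Graphics.DrawMeshInstanced` accepts at most 1023 instances per call, so larger projectile counts have to be split into chunks. The sketch below (Python, with hypothetical helper names) keeps projectiles as plain positions, builds a translation matrix per projectile and chunks them; in C# each chunk would be a `Matrix4x4[]` passed to something like `Graphics.DrawMeshInstanced(mesh, 0, material, chunk)`.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]      # 4x4 nested lists, translation in the last column
MAX_INSTANCES_PER_CALL = 1023   # Unity's per-call limit for DrawMeshInstanced


def translation_matrix(x: float, y: float, z: float) -> Matrix:
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def build_batches(positions: Sequence[Tuple[float, float, float]],
                  batch_size: int = MAX_INSTANCES_PER_CALL) -> List[List[Matrix]]:
    """Split projectile transforms into arrays small enough for one instanced draw each."""
    matrices = [translation_matrix(*p) for p in positions]
    return [matrices[i:i + batch_size] for i in range(0, len(matrices), batch_size)]
```

For example, 2500 projectiles become three draw calls of 1023, 1023 and 454 instances. In a real frame loop you would write into preallocated arrays instead of building new lists every frame, for the same garbage-collection reasons as pooling.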

Effects

Since AudioStorm is built on the Lightweight Render Pipeline, it can make use of the powerful new VFX Graph and ShaderGraph. The grass and foliage effects were built using ShaderGraph and synced with in-game events. When things get intense, the wind picks up and the grass takes on a bioluminescent glow, heightening the tension.
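The post doesn’t describe how game events reach the shaders. One plausible approach, sketched here purely as an assumption, is to keep a single 0..1 intensity value that gameplay events push up or down, ease it toward its target each frame so the wind and glow ramp smoothly, and map it onto shader properties. The `IntensityDriver` class and the `_WindStrength`/`_GlowIntensity` property names are hypothetical; in Unity the values could be pushed to every material with `Shader.SetGlobalFloat`.

```python
import math


class IntensityDriver:
    """Eases a 0..1 intensity toward a target set by gameplay events."""

    def __init__(self, response_time: float = 0.5) -> None:
        self.value = 0.0
        self.target = 0.0
        # Time constant: after one response_time the value has closed
        # about 63% of the gap to the target.
        self.response_time = response_time

    def set_target(self, target: float) -> None:
        self.target = min(max(target, 0.0), 1.0)

    def update(self, dt: float) -> float:
        # Exponential smoothing that gives the same result regardless of frame rate.
        alpha = 1.0 - math.exp(-dt / self.response_time)
        self.value += (self.target - self.value) * alpha
        return self.value

    def shader_params(self, calm_wind: float = 0.2, storm_wind: float = 1.5,
                      max_glow: float = 3.0) -> dict:
        # Hypothetical property names and ranges for the grass shader.
        return {
            "_WindStrength": calm_wind + (storm_wind - calm_wind) * self.value,
            "_GlowIntensity": max_glow * self.value,
        }
```

Deriving the smoothing factor from `dt` matters in VR, where frame times vary between headsets: two 0.25 s updates land on the same value as one 0.5 s update.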

Technical Conclusions

Bear in mind that this game was designed around some of the latest Unity tech, with limited hardware as the target:

- For mobile VR, LWRP is definitely the way to go; the draw-call reduction is huge. The downside is that its graphical fidelity is capped if you also want to release on a tethered system. I suspect that for VR titles targeting both mobile and high-end hardware, the cost of shipping a variant built on the Standard pipeline or HDRP may not be worth it: ShaderLab shaders, lighting setups and material setups all require a second version.

- In future, VR projects with a large number of “objects” may want to consider using DOTS to take advantage of the Quest’s 3 available cores. It’s worth bearing in mind that Unity used DOTS to showcase its Megacity demo on a phone.

- Creating custom playables for timeline is a quick win for general sequencing tasks and gives designers a familiar interface to work with.